Published by AstroAwani, MySinchew, image by AstroAwani.

In today’s tech-driven world, state security forces face escalating challenges from violent extremism and terrorism (VE&T) that exploits cutting-edge technologies. Countering online VE&T demands that these forces navigate complex, data-intensive environments that are often beyond their expertise, while their efforts are constrained by operational gaps, limited capacity, and a rapidly evolving digital landscape.

Artificial Intelligence (AI) has emerged as a vital tool in combating VE&T, excelling in decision-making, language translation, and pattern recognition (UNOCT & UNICRI, 2021). Its capacity to quickly analyse vast datasets and detect patterns far exceeds human capabilities, offering critical advantages.

Emir Research’s systematic analysis in “Global P/CVE Trends: A Roadmap for Malaysia’s Resilience” emphasises the global best practice of integrating intelligence, evidence-based strategies, and advanced technology in Preventing and Countering Violent Extremism (P/CVE). However, in ASEAN, nations like Malaysia and Thailand often rely on traditional militaristic methods to address VE&T. Progress in AI governance frameworks remains slow, hindered by the absence of national regulations and regional cooperation (Wan Rosli, 2024), despite UN calls for more robust AI oversight.

Terrorist groups often outpace governments in leveraging unregulated AI technologies, heightening VE&T threats and undermining P/CVE efforts (Lakomy, 2023). By exploiting AI to enhance operations, expand reach, and evade detection, VE&T actors gain a significant advantage. Falling even a step behind allows them to innovate unchecked, exacerbating the threat and widening the gap between their capabilities and state-led countermeasures.

Surprising AI uses by VE&T actors

Initially infamous for blending celebrity faces with pornographic content (UNOCT & UNICRI, 2021), deepfakes powered by generative adversarial networks (GANs) are now being weaponised by terrorists to spread disinformation, damage reputations, and facilitate extortion, blackmail, and harassment. These tactics fuel social unrest and political instability (Bamsey & Montasari, 2023). The surge in misinformation and disinformation heightens fear and anxiety, with severe psychological effects on public morale and on counter-terrorism agents, which are key objectives of VE&T actors (Akwen et al., 2020; Ismaizam, 2023).

One of the most surprising and lesser-known AI uses by VE&T actors is the deployment of AI-powered sentiment analysis and social engineering tools to manipulate public opinion and incite unrest. Advanced algorithms analyse social media trends, public sentiment, and behavioural patterns to identify societal fractures, enabling the creation of targeted disinformation campaigns that exploit existing divisions.

VE&T actors exploit sentiment analysis to create highly personalised propaganda and fake news, deepening divisions, inciting conflicts, and recruiting supporters. AI-driven natural language generation (NLG) crafts compelling narratives tailored to resonate deeply with the beliefs and perspectives of targeted audiences (Ismaizam, 2023; Wan Rosli, 2024). To evade detection, they use AI to produce multiple variants, including multilingual translations and propaganda disguised as innocuous content (Tech Against Terrorism, 2023).

The case of 21-year-old Chail, who attempted to assassinate Queen Elizabeth II under the influence of a chatbot he was emotionally attached to (Singleton et al., 2023), highlights the alarming role AI chatbots and other AI-powered virtual companions can play in instilling and reinforcing VE&T ideologies.

Research by Berger & Morgan (2015) reveals that groups like IS extensively deploy bots to automate propaganda dissemination on social media, imitating human interactions by responding to messages and comments. As self-radicalisation and lone-wolf attacks rise globally, including in Malaysia, unchecked AI technologies pose a significant risk of amplifying access to online VE&T propaganda.

Uncrewed aerial systems (UAS), commonly known as drones, present another significant VE&T threat, as per UN Security Council Counter-Terrorism Committee findings (Liang, 2023). Worryingly, in Southeast Asia, IS-affiliated groups have already reportedly used drones for surveillance and reconnaissance (The Diplomat, 2021).

The pseudonymous and decentralised nature of cryptocurrencies, such as Bitcoin, has made them indispensable for VE&T organisations in raising and transferring funds across borders. For example, Bitcoin transactions were linked to IS in financing the 2019 Sri Lankan bombings (Gola, 2021). Moreover, by utilising AI-driven algorithms, terrorists exploit cryptocurrencies to purchase illegal weapons, forged documents, and other illicit goods and services on the dark web.

These examples of AI usage by VE&T clearly demonstrate its worrying sophistication, underscoring the urgent need for robust AI governance and advanced countermeasures to address these escalating threats effectively.

Anticipating how VE&T actors will exploit AI and other advanced technologies, and devising countermeasures in advance, is critical. For instance, if they use AI to design explosives or weapons, security agencies must leverage AI to identify and tightly regulate precursor chemicals. Likewise, if VE&T groups employ AI to disguise messages within innocuous content, security forces must deploy AI to detect and decode such materials.

The potential of AI and VR technologies in P/CVE

AI and Virtual Reality (VR) hold significant potential in P/CVE but remain underutilised, with VR being particularly untapped. To counter the technological advancements of VE&T actors, governments must proactively invest in developing and deploying AI and VR technologies. Key applications include content moderation, predictive analytics, counter-messaging, and emergency response training.

Tech companies often rely on automated content moderation to manage harmful content, including VE&T materials, by removing it, reducing its visibility, or redirecting users away from it. Platforms like Facebook and Google utilise machine learning (ML) technologies, such as language models, to detect and filter such content. Facebook, for example, reported that over 98% of its moderation efforts are initiated by automated systems rather than user reports (Ammar, 2019). However, human expertise remains crucial, as addressing the nuanced drivers of VE&T requires context and cultural sensitivity beyond AI’s current capabilities.

Following the Global Internet Forum to Counter Terrorism (GIFCT) model, Malaysia should collaborate with major technology companies to establish a shared industry database that streamlines cross-platform coordination in removing VE&T content, while maintaining strict data privacy standards. A shared database is also vital because automated ML-based content moderation depends heavily on robust training data. With fewer than 40,000 examples, for instance, accuracy drops significantly.
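As a rough illustration of how such a shared database might coordinate removals without platforms exchanging the content itself, the sketch below stores only fingerprints (hashes) of flagged material. It is a minimal sketch under stated assumptions: the `SharedHashRegistry` class, its methods, and the platform names are hypothetical, and exact-match SHA-256 is used purely for simplicity. Real deployments would more likely need perceptual hashing so that slightly altered copies are still matched.

```python
import hashlib


class SharedHashRegistry:
    """Hypothetical in-memory stand-in for a cross-platform hash database."""

    def __init__(self):
        # Maps a content fingerprint to the set of platforms that reported it.
        self._hashes = {}

    @staticmethod
    def fingerprint(content: bytes) -> str:
        # Assumption: exact-match SHA-256; only the digest is ever stored.
        return hashlib.sha256(content).hexdigest()

    def report(self, content: bytes, platform: str) -> str:
        """Record that a platform flagged this content; return its fingerprint."""
        digest = self.fingerprint(content)
        self._hashes.setdefault(digest, set()).add(platform)
        return digest

    def is_known(self, content: bytes) -> bool:
        """Check whether any participating platform has flagged identical content."""
        return self.fingerprint(content) in self._hashes


# Illustrative, made-up data: one platform reports, another checks an upload.
registry = SharedHashRegistry()
registry.report(b"example flagged video bytes", platform="platform_a")
match = registry.is_known(b"example flagged video bytes")
no_match = registry.is_known(b"unrelated holiday video bytes")
```

Because only fingerprints are shared, a participating platform can check an upload against the registry without ever receiving the original material, which is one way the privacy requirement above could be respected.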

However, an overly aggressive “move fast and break things” approach risks censoring content unrelated to VE&T, potentially reinforcing extremists’ narratives of persecution. Additionally, nuances in language, such as humour and irony, pose significant challenges for automated moderation systems (UNOCT & UNICRI, 2021). As these tools are often trained predominantly on major languages, caution is essential when applying them to minority languages, particularly those spoken in South and Southeast Asia.

While AI technologies are used to craft personalised VE&T propaganda, they can also be leveraged to create highly tailored counter-narratives. These counter-messages can mimic the vernacular, tone, and style of at-risk individuals or groups (Ismaizam, 2023).

AI chatbots can be trained on data reflecting the extremist ideologies of specific VE&T groups—such as social media posts, videos, and articles—enabling them to “think” like radicalised individuals. Counter-VE&T practitioners can then utilise these chatbots to test and refine narratives, identifying the most effective strategies for promoting deradicalisation.

AI models can also predict the potential activities of at-risk individuals by analysing real-time data on their online behaviours. While predictive analytics show promise for detecting and intercepting VE&T-related activities, they are limited by the inherent unpredictability of human behaviour and raise ethical concerns about discriminatory bias and unwarranted mass surveillance.

Nevertheless, open-source-based analysis employing data anonymisation or pseudonymisation provides a viable means of identifying trends and forecasting future behaviours of VE&T groups, while adhering to data privacy principles such as those enshrined in the ASEAN Framework on Personal Data Protection.
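To make the idea of pseudonymisation concrete, the sketch below replaces account identifiers with keyed-hash tokens before any trend counting takes place. It is an illustrative sketch only: the function names, the placeholder key, and the sample records are assumptions made for this example and do not describe any particular agency’s method.

```python
import hashlib
import hmac
from collections import Counter

# Hypothetical secret key; in practice it would be stored separately from the data.
SECRET_KEY = b"replace-with-a-securely-stored-key"


def pseudonymise(account_id: str, key: bytes = SECRET_KEY) -> str:
    """Return a stable keyed-hash token that stands in for the account identifier."""
    digest = hmac.new(key, account_id.encode("utf-8"), hashlib.sha256).hexdigest()
    return digest[:16]  # truncated for readability only


def topic_counts(posts):
    """Count posts per (pseudonym, topic) without retaining raw identifiers."""
    counts = Counter()
    for post in posts:
        counts[(pseudonymise(post["account"]), post["topic"])] += 1
    return counts


# Illustrative, made-up records.
sample_posts = [
    {"account": "user_a", "topic": "recruitment"},
    {"account": "user_a", "topic": "recruitment"},
    {"account": "user_b", "topic": "fundraising"},
]
trend = topic_counts(sample_posts)
```

The same account always maps to the same token, so analysts can still follow trends over time, while re-identification would require access to the separately held key.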

Correspondingly, the UN has strongly emphasised the potential of Augmented Reality (AR) and VR technologies as effective P/CVE tools in border security, emergency response, deradicalisation initiatives, and criminal investigations.

Conventional counter-terrorism training, such as drills, has several limitations, including the absence of realistic scenarios and a lack of personalised performance feedback. In contrast, VR allows trainees to practise in a controlled and repeatable environment without exposing them to genuine danger.

For instance, a university VR Serious Game (SG) developed by Lovreglio et al. (2022) featured a counter-terrorism scenario where all 32 participants significantly enhanced their understanding of the “Run, Hide, Fight” strategy. This improvement stems from VR’s interactive and immersive environment, where users engage in tasks, make decisions, apply knowledge to real-world actions, observe outcomes, and reflect on their choices (Lamb & Etopio, 2020).

Moreover, Shipman et al. (2024) found that VR can capture movement patterns closely mirroring those observed in physical reality experiments. This suggests that VR offers a cost-effective method for data collection in emergency scenarios, providing realistic and controlled environments without the logistical challenges of physical drills.

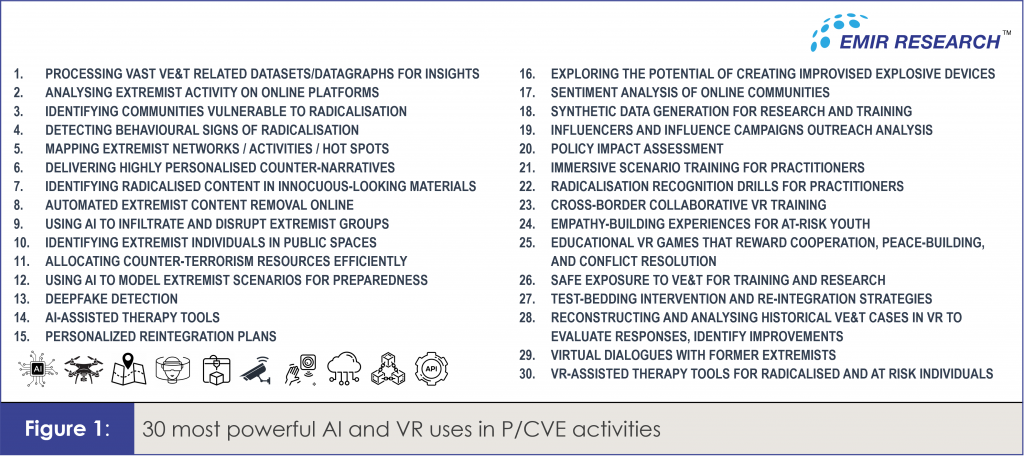

Figure 1 highlights additional innovative and impactful applications of AI and VR in P/CVE initiatives.

The recently unveiled MyPCVE, Malaysia’s first official P/CVE strategy, leaves the role of AI and advanced technologies in addressing VE&T unclear, despite rightfully recognising “innovation” as a key component aligned with Madani principles.

Globally, there is a growing focus on integrating advanced technology into P/CVE efforts in ways that move beyond mere buzzwords. Moreover, in leading AI nations, governments are often the first big customers of AI. As Malaysia seeks to lead in the AI space, it must embrace this trend and meaningfully integrate advanced technologies into P/CVE efforts and broader public service delivery.

Dr Margarita Peredaryenko and Avyce Heng are part of the research team at EMIR Research, an independent think tank focused on strategic policy recommendations based on rigorous research.